Cartoon Magic Mirror

17 December 2024

Hello hello hello

hello

this past semester i decided to take a VR/AR independent study class led by Joe Geigel. and I decided to work on real-time facial motion capture: taking a real-time video feed of a face, extracting facial motion features from that video, and mapping those motions onto a virtual avatar.

this was the final result:

now, there are a lot of state-of-the-art tools available for mapping facial capture data onto 3D avatars - Unreal Metahumans with LiveLink, Apple's Memoji, and Meta Quest Pro facial capture, to name a few. and they're all really impressive technical achievements! the fact they're able to function AT ALL is astounding.

but

this is due to a concept called the uncanny valley. in short, the facial capture is very close to being realistic - close enough that our brains register the captured faces as human faces - but it's not quite perfect and we're able to tell that something is off. and that conflict creates a fear reaction.

which is not ideal if you're trying to use facial capture for virtual acting, or as a VTuber, or in a virtual world like ARChat. you want an avatar that's engaging and charasmatic, not creepy.[1]

i'm sure there's plenty of research ongoing by Apple and Epic and Facebook and other companies to push through the uncanny valley and make 3D-modeled virtual faces that move indistinguishably from real faces. entire teams of world-class experts are working on this. i can't compete with that. i'm 24 and a student and i'm not getting paid to work on this for years on end[2].

but, there's another solution to the creepiness problem: what if, instead of trying to push through the uncanny valley, we step back from it? how much expressiveness can we get from an avatar that's explicitly trying to look unrealistic?

enter, the hand-animated magic mirror, with a variety of expressions:

it also supports blinking and mouth open/close detection, which allows for rough lipsyncing.

all of that facial motion is being driven by my face in real time! i did all the programming and all the research and all the art, by myself, over the course of a single semester. and in the rest of this post i'll explain how i implemented it and how the final version works.

Background

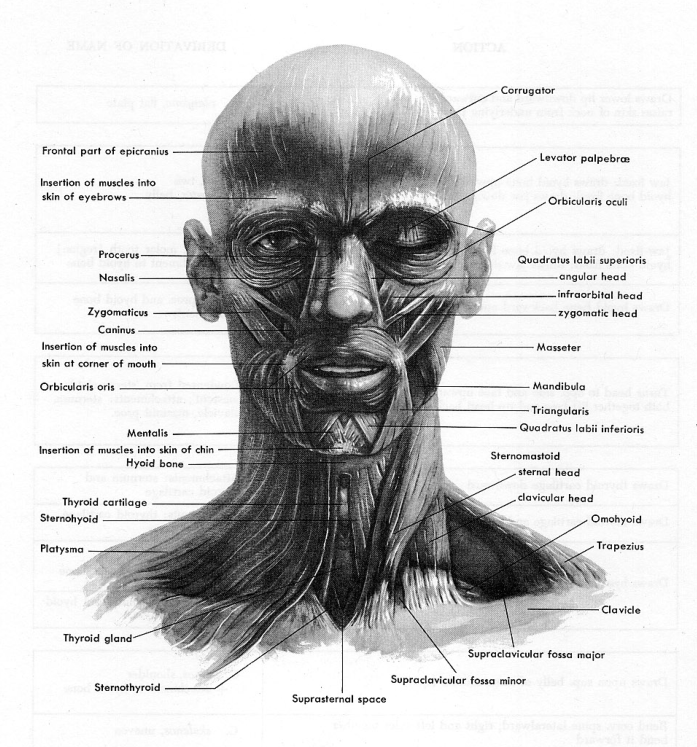

before i get into the technical details of this project, we should explore the question: how do facial expressions work? we can all recognize when somebody is happy or sad or angry by looking at them, but what makes a face happy? or sad, or angry?

well, muscles!

the face is comprised of skin and muscles[citation needed], and every single expression that a person can make occurs because some combination of muscles tenses and other muscles relax. and, if you can record the value of each muscle movement, you can reconstruct the motion of the face[3].

3D facial animation software uses that fact to recreate expressions on a 3D model. artists can create a set of hand-modeled poses that represent each muscle movement, and those poses can be blended into the model in fractional increments between 0 and 1. hence, each pose is called a blendshape. complex emotions, lipsyncing, and even full-on acting can be achieved by animating blendshapes and interpolating between different blend values over time[4].

real-time facial capture a la Apple works by taking a camera feed of a human face, using computer vision to determine which muscles' blendshapes are active and which are not, then sending that blendshape data to a virtual avatar that then reconstructs the person's expression.

Emotion detection

direct mapping isn't the only option you have for processing blendshape data. you can also detect emotions from it, which is how I accomplished the emotion animation in this project.

there are different approaches you can take for real-time emotion detection, each with different tradeoffs. the most robust products use neural-network based emotion detection. there are a few off-the shelf products available that use neural nets to detect Paul Ekman's seven basic universal emotions: joy, anger, fear, surprise, sadness, contempt, and disgust. however, that ended up not being a good fit for this project. it primarily targets research applications, and as such doesn't expose information that's needed to drive a charismatic 3D model. in particular, it doesn't detect different subtypes of a particular emotion, so it can't distingiush between smiling and grinning like the final magic mirror can.

i decided to use a simple threshold-based model of emotion detection, since latency and computation speed are a much larger concern for this project exact detection accuracy. happiness is detected by whether or nor the mouthSmiling blendshape is active. grinning is detected when the mouth is smiling and also open. surprise is detected when the mouth is open, and the eyebrows are pulled back. sadness is detected when the mouth is frowning. and, anger is detected when the mouth is frowning and eyebrows are scrunched.

this approach wasn't as robust as i liked, but it worked for the small set of emotions i implemented in this version.

Results

i demoed this at RIT's Frameless exhibition, and the feedback was positive! most people liked the demo, and a few people were unimpressed, but nobody said it was creepy, so - mission accomplished! that said, the simplicity of the implementation showed its limitations when i tested it on a wide variety of people. a bunch of the booth visitors tried to do an open-mouth, teeth-bared sort of anger expression, which i hadn't implemented, so it didn't show up on the avatar. and, when I tried to implement more subtle emotions - particularly, when i tried to implement smirking - the simple threshold-based system wasn't able to detect it reliably. so whenever i expand on this project, i'll explore different methods of emotion detection that allow for detecting those more subtle expressions.

still, I'm happy with the overall result of this project! it was really cool to see people laugh and be excited at their virtual avatar. and going forwards, I'd like to expand on this so it's usable for streaming applications.

Tools

- Unity as rendering/animation engine

- Rokoko studio + ipad mini for ARKit facial capture

- OpenSeeFace to capture body position from laptop camera

- C# to convert ARKit facial capture data to discrete animation states

- Aseprite to draw character, background, and emotion animations

Footnotes

[1] it's not entirely clear to me where in the capture toolchain the breakdown is happening, but I suspect the problem is mostly with facial capture. there's a lot of nuance in peoples micro-expressions that make faces look realistic - i can tell you from painting faces that the tiniest fleck of paint can completely change the expression you see on a face - and i suspect that video capture technology isn't nuanced enough to capture all that detail yet.

[2] i am in fact paying to work on this. quite frankly i feel like the monetary flow is going in the wrong direction but i digress

[3] Paul Ekman's Facial Action Coding System is the foundational research that explored that concept scientifically, but it's not research that I used directly.

[4] and, to follow up on [1] - hand-animated blendshape facial animation can look REALLY good! so creepiness isn't an inherent problem with blendshape animation. Valve was a pioneer of doing that in videogames, and the fruits of that labor can be seen in [Team Fortress 2](https://www.youtube.com/watch?v=jHgZh4GV9G0) and [Half-Life 2](https://www.youtube.com/watch?v=7HBwxbWVb-M). the facial animation in those games is a high watermark that is difficult to see matched even today, and certainly isn't matched by real-time facial capture software.